
Artificial intelligence is rapidly reshaping the financial sector. This column introduces the seventh report in The Future of Banking series, which examines the fundamental transformations induced by AI and the policy challenges it raises. It focuses on three main themes: the use of AI in financial intermediation, central banking and policy, and regulatory challenges; the implications of data abundance and algorithmic trading for financial markets; and the effects of AI on corporate finance, contracting, and governance. While AI has the potential to improve efficiency, inclusion, and resilience across these domains, it also poses new vulnerabilities — ranging from inequities in access to systemic risks — that call for adaptive regulatory responses.

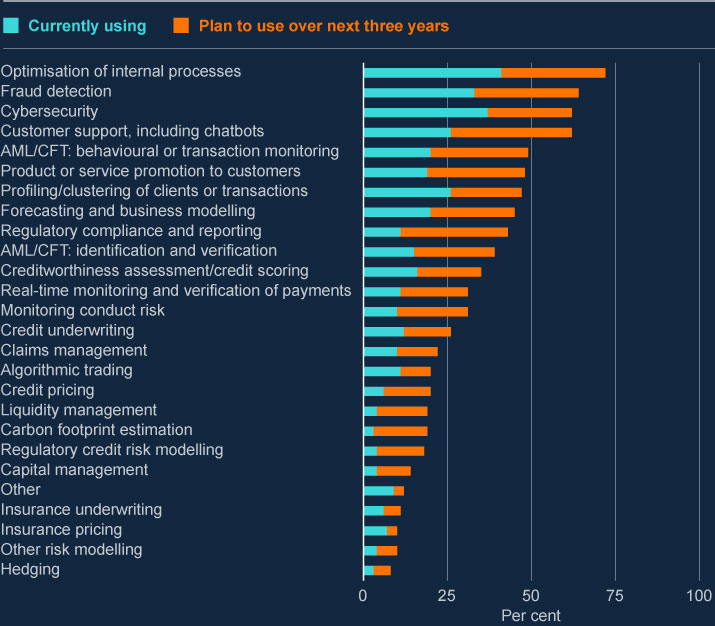

Artificial intelligence (AI), particularly generative AI (GenAI), is reshaping financial intermediation, asset management, payments, and insurance. Since the 2010s, machine learning (ML) has had a profound impact on various fields, including credit risk analysis, algorithmic trading, and anti-money laundering (AML) compliance. Nowadays, financial institutions are increasingly leveraging AI to streamline back-office operations, enhance customer support through chatbots, and improve risk management through predictive analytics. The fusion of finance and technology has become a transformative force, bringing both challenges and opportunities to financial practices and research (Carletti et al. 2020, Duffie et al. 2022).

In the financial sector, AI offers avenues for enhanced data analysis, risk management, and capital allocation. The big data revolution can result in significant welfare gains for consumers of financial services (households, firms, and government). However, there are risks that these gains might not be fully achieved because of market failures stemming from frictions in financial markets – asymmetric information, market power, and externalities – that AI may exacerbate or modify. As AI systems become more widespread, they introduce new challenges for regulators tasked with balancing the benefits of innovation with the need to maintain financial stability and market integrity, protect consumers, and ensure fair competition.

The risks associated with the use of AI/GenAI are extensive: privacy concerns and fairness issues (e.g. inducing undesirable discrimination, algorithmic bias of models from imperfect training data), security threats (e.g. facilitating cyberattacks or malicious output), intellectual property violations (e.g. infringing on legally protected materials), lack of explainability (e.g. uncertainty over how an answer is produced), reliability issues (e.g. stochastic outputs leading to hallucinations), and environmental impacts (e.g. CO2 emissions and water consumption).

Furthermore, AI introduces new sources of systemic risk. The opacity and lack of explainability of AI models make it difficult to anticipate or understand systemic risks until they materialise, underscoring the need for diversity in model design and robust stress-testing protocols. Additionally, the use of AI models may increase correlations in predictions and strategies, heightening the risk of flash crashes, a risk that is amplified by the speed, complexity, and opacity of AI-driven trading. Finally, increasing returns to scale in AI services may lead to a concentrated market for some AI services to financial intermediaries (i.e. cloud services), increasing systemic risks.

The seventh report in The Future of Banking series, part of the Banking Initiative from the IESE Business School, discusses what is old and what is new in the use of AI in finance (Foucault et al. 2025). It examines the transformations and challenges that AI poses for the financial sector (in intermediation, markets, regulation, and central banking), analyses the implications of data abundance and new trading techniques in financial markets, and studies the implications of AI for corporate finance. The report strives to highlight policy implications.